To generate appropriate responses, a chatbot needs both short-term and long-term memory, so that it can relate the current conversation to past ones. One of the major challenges in this project is to effectively relate a user query to the previous dialogue. We address this problem with query-content attention. In addition, common practice in chatbot training is to feed the model as much data as it can take, which increases the time cost of data collection and annotation and the computational cost of model training. To reduce these costs, we employ few-shot learning, which requires less data to train a model and therefore significantly lowers both the data collection and annotation costs and the computational costs.
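The text does not spell out how the query-content attention is computed. As a rough illustration only, the sketch below assumes a standard scaled dot-product attention in which the embedding of the current user query is scored against the embeddings of previous dialogue turns; the function names `softmax` and `attend` and the toy vectors are hypothetical and are not taken from the project.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, history):
    """Scaled dot-product attention of one query vector over past turns.

    Assumption: every turn in `history` is a vector of the same length as
    `query`. Returns the attention weights and the weighted context vector.
    """
    dim = len(query)
    scores = [
        sum(q * h for q, h in zip(query, turn)) / math.sqrt(dim)
        for turn in history
    ]
    weights = softmax(scores)
    context = [
        sum(w * turn[i] for w, turn in zip(weights, history))
        for i in range(dim)
    ]
    return weights, context


# Toy embeddings, made up for illustration (not real model outputs).
history = [
    [1.0, 0.0, 0.0],  # earlier turn on the same topic as the query
    [0.0, 1.0, 0.0],  # unrelated turn
    [0.9, 0.1, 0.0],  # mostly related turn
]
query = [1.0, 0.0, 0.0]

weights, context = attend(query, history)
print(weights)
```

In this toy setup, the turns most similar to the query receive the largest weights, so the resulting context vector is dominated by the relevant parts of the dialogue history.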

Uniqueness and Competitive Advantages:

-

Able to handle multi-turn dialogue

-

Able to handle multi-context conversation

-

Able to extend to multi-domain based on few shot learning mechanism

-

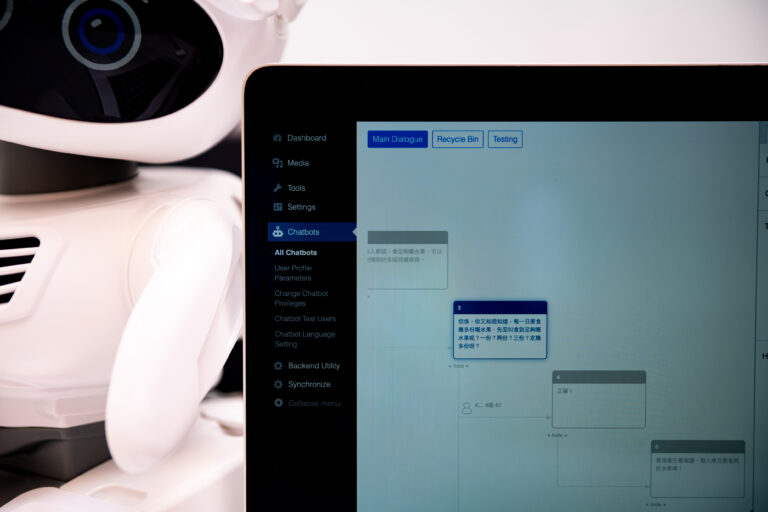

Easily customizable without coding